Content Update Notice: This blog was written before the introduction of the Config Import feature. Follow that process to easily copy over the custom rules and OU selections from an existing AADC installation.

Resilient operations for AADConnect involve three main topics that I want to cover today: Health, High Availability (HA) and Upgrades.

Synchronization Health

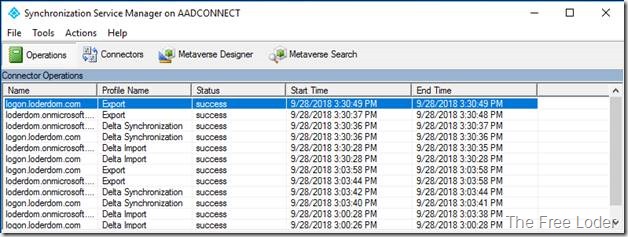

The ideal operational state for AADConnect has two characteristics. The first is that no errors occur during any stage of the sync process. This snapshot from my lab shows entire sync cycles running without error. All the imports, delta syncs and exports have a status of success.

Any current version of AADConnect will have the health agent installed. The health agent reports any data sync issues that are occurring into your Azure AD portal. It’s more likely that you are working in the portal than looking at the Sync Manager, so surfacing the errors in the portal should give them more visibility. You need to work on clearing out every error that is reported. I’ve seen too many environments where errors are allowed to persist, and the companies wonder why they have account problems in Azure AD. Having zero errors will also make future upgrades and changes easier. We’ll see why later.

The second characteristic we want to see is AADConnect having zero disconnectors in each of the connectors. Those of you not familiar with how the sync engine works in AADConnect (or in MIM) may not know what a disconnector is. The simple answer is that a disconnector is an object in the connected directory that is in scope, yet not being managed by the sync engine. The more complete answer is described in the architecture document.

Having zero disconnectors on your Azure AD connector means that every object in Azure AD is being actively managed by the sync engine.

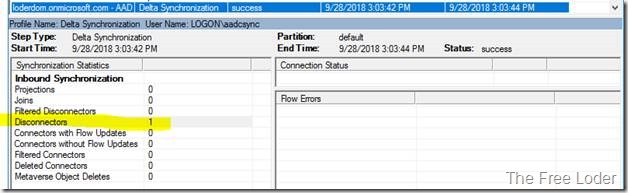

Disconnectors are reported during the Delta Sync phase for the connector.

This shows that I currently have one disconnector in Azure AD. Disconnectors in Azure AD are especially troublesome as it means nothing is managing that object in Azure AD. It will never get changed or deleted by AADConnect.

To figure out what the disconnector is we need to run the command line tool CSExport to export the disconnectors.

The syntax to use for exporting disconnectors is csexport.exe MAName ExportFileName /f:s /o:d

As an example in my lab, to get the disconnectors for the Azure AD connector I run

C:\>"C:\Program Files\Microsoft Azure AD Sync\Bin\csexport.exe" "loderdom.onmicrosoft.com - AAD" c:\temp\aaddisconnectors.xml /f:s /o:d

Microsoft Identity Integration Server Connector Space Export Utility v1.1.882.0

c 2015 Microsoft Corporation. All rights reserved

[1/1]

Successfully exported connector space to file 'c:\temp\aaddisconnectors.xml'.

You need to work your way through the XML document and figure out why each object is disconnected. Should it have been connected to something in Active Directory and isn’t? Is it an orphaned object in Azure AD that needs to be deleted? The goal is to have zero disconnectors for the Azure AD connector.
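Before reading the XML object by object, a quick count per object type can show where to start. The following is a minimal Python sketch, not part of the AADConnect tooling. It assumes each exported object appears as a `cs-object` element carrying an `object-type` attribute; check those names against your own export file and pass different ones if they differ. The sample data is made up.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def summarize_cs_export(xml_text, object_tag="cs-object", type_attr="object-type"):
    """Count exported connector space objects per object type.

    The element and attribute names are assumptions about the export
    layout; override them if your file uses different names.
    """
    root = ET.fromstring(xml_text)
    counts = Counter()
    for obj in root.iter(object_tag):
        counts[obj.get(type_attr, "(unknown)")] += 1
    return counts


# Made-up sample standing in for a real export file.
SAMPLE = """<cs-objects>
  <cs-object cs-dn="CN=example1" object-type="user"/>
  <cs-object cs-dn="CN=example2" object-type="user"/>
  <cs-object cs-dn="CN=example3" object-type="group"/>
</cs-objects>"""

if __name__ == "__main__":
    for object_type, count in summarize_cs_export(SAMPLE).most_common():
        print(f"{object_type}: {count}")
```

For a real file, `ET.parse(path).getroot()` can replace `ET.fromstring` so the parser honors the encoding declared in the file.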

For any Active Directory connectors you have, the goal is also to have zero disconnectors, but it’s more difficult to achieve a value that is absolutely zero. This is because any container-type objects (such as the OUs that contain the users) get reported as disconnectors. So realistically, we’re looking to achieve a count of disconnectors that is low and static so that we can tell if the number of disconnectors has changed.

To minimize the number of disconnectors that occur because of the OUs, you can run through the configuration wizard and only select the OUs with users and computers that you care about, unchecking the OUs that aren’t needed.

A word of caution here. Don’t just unselect random OUs without being absolutely certain they aren’t needed. If an OU is deselected and it contained objects that were being synced into Azure AD, those objects will be deprovisioned from Azure AD. You can mitigate this risk by using a staging server to test a new configuration change before the change goes live. We’ll talk more about staging mode later.

If your AD setup is such that you have a small and static number of OUs selected, you can ideally end up with a disconnector count around 10 or so. Know what that number is for your environment, so that if it changes that means you have a new disconnector that should be reviewed and remediated. If your AD setup has lots of OUs that need to be included, and the number of OUs keeps changing (maybe you have an OU per site or department and those change frequently), you can create a custom inbound rule that will project the OUs into the metaverse. This changes the OUs into connectors and returns you to a state where the number of disconnectors should be almost zero. See Appendix A for how to create the necessary rule for connecting OUs.

In summary for this section, an AADConnect server with zero errors and zero disconnectors means we are running a well-managed environment that has no data problems affecting the sync operations of AADConnect.

High Availability: Using a Staging Server

The good news for AADConnect is that the sync engine itself is not involved with any run-time workloads for your users, reducing our HA requirements. You could shut off the AADConnect sync service and your users would still have access to all their Azure and Office 365 resources. Changes to user data (adds, deletes, changes) won’t happen, but that doesn’t affect availability in the short term.

However, depending on which sign-on option you are using, there may be additional considerations. If you are performing Password Hash Sync, that sync process runs on its own every two minutes. Users could be impacted if they change their AD password and AADConnect isn’t running; there will be a mismatch between their cloud password and their on-premises AD password. If you are performing Pass-through Authentication, the first agent is installed on the AADConnect server; you need to install additional agents on other servers to provide HA for that feature. If you have configured Password Writeback, then the AADConnect service needs to be running for the Service Bus channel to be open. Finally, if you use ADFS, HA designs for federated sign-on are out-of-scope for this AADConnect discussion.

Accordingly, we need some measure of HA to keep the Azure AD data from becoming too stale. “Too stale” is a relative term. For a small environment with few changes that may mean you can run for weeks without AADConnect running and not experience any issues. For larger environments, you may not want to have AADConnect be down for more than a few hours.

The major HA design model for AADConnect is to have a second, fully independent installation of AADConnect that runs in staging mode. This server will see all the same data changes that happen in AD, is configured with the same rule sets as the active server and validates that the changes it expects to see in Azure AD were actually made in Azure AD by the active server. The general concept is that if both servers see the same input, and have the same rules, they will both independently arrive at the same output result. The Operation tasks topic goes into detail as to how to set up the staging server, but it neglects to cover how to realistically get the same input, the same rules and the same output on both sides. All three are problems I’ve seen in the field for implementations that have some measure of customization in the rules and, shall we say, less than pristine data sources.

Let’s start with making sure we have the same rules on both the active and staging server. To get your customizations on the staging server, you can either create them all by hand via the GUI or leverage the export capability from the active server. When you select one or more rules in the Rules Editor and select Export, you’ll get a generated PowerShell script that can almost be used as-is to create the same rule on the staging server. The main problem with the generated PowerShell script is that it uses GUIDs to represent the connectors, and those GUIDs are unique to the AADConnect server on which they were created. The same connector will have a different GUID on the active and staging servers. But if you manually adjust the Connector GUID, you’ll be able to run the script to recreate the custom rule. The PowerShell script is designed to create a new rule, not apply customizations to an existing rule. This is a good reason to follow the GUI guidance when customizing a built-in rule: disable the built-in rule and create a new one to hold the customizations.
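If you have many exported rules, the manual GUID adjustment can be scripted. Here is a minimal Python sketch of that text substitution, under the assumption that you have already looked up the connector GUIDs on both servers yourself. The `GUID_MAP` values and the script fragment are made-up placeholders, not real export output.

```python
import re

# Matches the usual 8-4-4-4-12 hexadecimal GUID layout.
GUID_PATTERN = re.compile(r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def remap_connector_guids(script_text, guid_map):
    """Swap every GUID listed in guid_map; leave all other GUIDs untouched."""
    lookup = {old.lower(): new for old, new in guid_map.items()}

    def swap(match):
        found = match.group(0)
        return lookup.get(found.lower(), found)

    return GUID_PATTERN.sub(swap, script_text)


# Hypothetical mapping: active-server connector GUID -> staging-server connector GUID.
GUID_MAP = {
    "11111111-1111-1111-1111-111111111111": "22222222-2222-2222-2222-222222222222",
}

# Made-up fragment standing in for an exported rule script.
SAMPLE_SCRIPT = (
    "-Connector '11111111-1111-1111-1111-111111111111'\n"
    "-Identifier '33333333-3333-3333-3333-333333333333'"
)

if __name__ == "__main__":
    print(remap_connector_guids(SAMPLE_SCRIPT, GUID_MAP))
```

Only GUIDs present in the mapping are changed, so rule identifiers and anything else in the script pass through as they were. Review the rewritten script before running it on the staging server.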

Now that we’ve attempted to configure the same rules on both sides, how do we confirm that we successfully accomplished that task? I have a PowerShell script in GitHub called Reformat AADConnect Sync Rule Export for Easy Comparisons that will use the exported PowerShell scripts and an export of the connector configuration to generate a version of the creation scripts that is designed for comparison using WinDiff or a similar comparison tool. Each rule is rewritten into its own file, making it much easier to perform a rule-by-rule comparison without needing to export each rule one at a time.

When looking at the results of the comparison, you will generally find two categories of differences even when all the rules appear to have been duplicated appropriately. The first category is made up of the internal Identifier GUIDs of the rules themselves. Like the Connector GUIDs, each rule has an internal GUID that is unique per server. This difference can be ignored. The second category you’re likely to find is due to newer versions of AADConnect having different default rules than earlier versions. Newer rules usually add in new attribute flows, change calculations or introduce entirely new rules. When this occurs the precedence number for each rule can also change, resulting in more differences showing up when comparing the two different rule sets. But, after you’ve looked at a few of the difference details, you’ll notice the pattern and can quickly complete the validation that no other unexpected differences show up in the report.
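If the GUID noise makes a diff hard to read, one option is to mask GUIDs before comparing. The sketch below is a simple Python illustration of that idea, not the GitHub script mentioned above. Note the trade-off: it masks every GUID, connector GUIDs included, so it cannot tell you whether a rule is bound to the wrong connector. The sample rule text is made up.

```python
import difflib
import re

# Matches the usual 8-4-4-4-12 hexadecimal GUID layout.
GUID_PATTERN = re.compile(r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def mask_guids(text):
    """Replace every GUID with a fixed placeholder so GUIDs never show up as differences."""
    return GUID_PATTERN.sub("<GUID>", text)


def diff_rules(active_text, staging_text):
    """Return unified diff lines between two rule files after masking GUIDs."""
    active_lines = mask_guids(active_text).splitlines()
    staging_lines = mask_guids(staging_text).splitlines()
    return list(
        difflib.unified_diff(active_lines, staging_lines, "active", "staging", lineterm="")
    )


# Made-up rule text; real exported scripts look different.
ACTIVE = "Identifier: aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa\nPrecedence: 100"
STAGING = "Identifier: bbbbbbbb-bbbb-bbbb-bbbb-bbbbbbbbbbbb\nPrecedence: 101"

if __name__ == "__main__":
    for line in diff_rules(ACTIVE, STAGING):
        print(line)
```

With the samples above, only the precedence change is reported; the differing identifier GUIDs are hidden by the mask.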

Here’s an example WinDiff comparison between an active server and a staging server that was upgraded with a more recent build. We’re looking specifically at the In from AD – Group Common rule.

You can see the differences in the GUIDs (lines 3 and 11). Because this upgrade also has a new data flow, you can see additional changes to the ImmutableTag (line 14) and the new data flows (new section starting at line 170).

Please keep in mind that this comparison task is really designed to help make sure your own customizations were successfully duplicated. If there has been a change to one of the default rules in a newer version of AADConnect, like in this example, do not attempt to revert the rule back to a prior version. The newest default rules are almost always correct and represent the best default data flow for Azure AD that is currently available. But knowing that a default rule has changed is important for the following steps of getting a new staging server ready.

Next, we will want to confirm that the input data on the staging server is the same as on the active server. Going through the configuration wizard you can manually validate that the same OUs have been selected on both sides. Or you can use an export of the Connector configurations and compare the saved-ma-configuration/ma-data/ma-partition-data/partition/filter/containers sections of the XML documents. To export a Connector configuration, open the Synchronization Service GUI on the AADConnect server, navigate to the Connectors tab, highlight a connector and select Export Connector from the Actions menu. Provide a File name and click Save.
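The comparison of the containers sections can also be scripted. This is a minimal Python sketch that collects every value found under a `containers` element in each export and reports what appears on only one side. The `containers` path comes from the text above; the child element names in the sample (`inclusion`, `exclusion`) and the DNs are assumptions for illustration, and the code does not depend on them.

```python
import xml.etree.ElementTree as ET


def container_entries(xml_text):
    """Collect (tag, text) pairs for every non-empty element under a <containers> node."""
    root = ET.fromstring(xml_text)
    entries = set()
    for containers in root.iter("containers"):
        for node in containers.iter():
            text = (node.text or "").strip()
            if node is not containers and text:
                entries.add((node.tag, text))
    return entries


def compare_containers(active_xml, staging_xml):
    """Return (only_on_active, only_on_staging) as sorted lists."""
    active = container_entries(active_xml)
    staging = container_entries(staging_xml)
    return sorted(active - staging), sorted(staging - active)


def _sample(inner):
    # Wraps made-up container content in the path named in the text.
    return (
        "<saved-ma-configuration><ma-data><ma-partition-data><partition><filter>"
        f"<containers>{inner}</containers>"
        "</filter></partition></ma-partition-data></ma-data></saved-ma-configuration>"
    )


ACTIVE_XML = _sample(
    "<inclusions><inclusion>OU=Staff,DC=example,DC=com</inclusion>"
    "<inclusion>OU=Sales,DC=example,DC=com</inclusion></inclusions>"
)
STAGING_XML = _sample(
    "<inclusions><inclusion>OU=Staff,DC=example,DC=com</inclusion></inclusions>"
)

if __name__ == "__main__":
    only_active, only_staging = compare_containers(ACTIVE_XML, STAGING_XML)
    print("Only on active:", only_active)
    print("Only on staging:", only_staging)
```

The comparison is an exact string match, so differences in DN casing or spacing will be reported as differences.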

Finally, and most importantly, we need to validate that the same output results are occurring on both the active and staging servers. This is done by examining the export queues on the staging server. When in staging mode the one and only difference from active mode is that no export steps are executed.

Let’s have a quick discussion on how export data is created. In AADConnect, when a synchronization step is executed, the inbound rules from that specific connector and all outbound rules for all connectors are executed against each object being synced. The results of the sync task are compared to AADConnect’s current view of the connected data source (from the last time the object was imported) and any differences in data flows due to changed input values or different rules are computed and stored in the export queue. When the export step happens, the pending exports are written to the connected data source. However, because we’re in staging mode, the export step doesn’t happen and all the changes that AADConnect wants to make are nicely kept in the export queue for us to review.

Now that we know how the exports are constructed, we can see what happens in the ideal case. In the ideal case, the staging server has all the same input data from AD as the active server; it has the exact same rules; and it calculated the exact same final state of the objects as they already exist in Azure AD. Therefore, it has no changes that it wants to apply to Azure AD, so the export queue is empty. When the export queue is empty, the staging server is ideally situated to take over for the active server as it will immediately pick up from where the active server left off.

Unfortunately, the production systems I usually see are far from ideal. They have errors that are present, they have disconnectors that are present, and they have high volumes of changes being processed through them. This means the export queue on the staging server is almost never empty. Therefore, we need to analyze the export report to figure out why the staging server thinks that it has data that it wants to change.

To improve our analysis ability, we need to make sure we’ve accomplished the health status items we talked about earlier. We need to eliminate errors and minimize the disconnectors in the active server. Then we can go about trying to remove the noise that is due to transient data changes that are flowing through the active server and eventually seen by the staging server. To do this we can compare the pending exports between the active server and the staging server at two different points in time. By putting a delay in the comparison, we can ensure that the staging server has seen the changes that the active server was in the process of making. The first pass generates a list of potential changes that we care about. If those same changes are still present a few hours later, after several sync cycles have run, then we can be sure the changes are not in the queue simply due to an in-process change.

We end up needing a total of four exports for each Connector to run this comparison. That’s one each from the active and staging servers to start with, then a second set of exports from both a few hours later after some sync cycles have run. Generating an export file is a two-step process. We first look at the pending exports by returning to csexport.exe with syntax of csexport.exe MAName ExportFileName /f:x. After generating the XML document of the pending exports, we convert that to CSV using the CSExportAnalyzer with syntax like CSExportAnalyzer ExportFileName.xml > %temp%\export.csv

In my lab, one instance of those commands looks like this:

C:\Program Files\Microsoft Azure AD Sync\Bin>csexport.exe "loderdom.onmicrosoft.com - AAD" c:\temp\aadexport.xml /f:x

Microsoft Identity Integration Server Connector Space Export Utility v1.2.65.0

c 2015 Microsoft Corporation. All rights reserved

[1/1]

Successfully exported connector space to file 'c:\temp\aadexport.xml'.

C:\Program Files\Microsoft Azure AD Sync\Bin>CSExportAnalyzer.exe c:\temp\aadexport.xml > c:\temp\aadexport.csv
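Once all four CSV files exist, the two-point-in-time comparison can be sketched in Python. This is one reading of the procedure described above, not an official tool: rows pending on the staging server but not on the active server are treated as candidates, and only candidates present in both passes are kept. The sketch makes no assumption about the CSExportAnalyzer column layout beyond a header row; it compares whole rows. The column names and data in the sample are made up.

```python
import csv
import io


def load_rows(csv_text):
    """Read CSV text into a set of row tuples, skipping the first (header) row."""
    reader = csv.reader(io.StringIO(csv_text))
    next(reader, None)  # assumes the first row is a header
    return {tuple(row) for row in reader if row}


def persistent_differences(active_t1, staging_t1, active_t2, staging_t2):
    """Rows pending only on staging in BOTH passes, i.e. not transient changes."""
    first_pass = load_rows(staging_t1) - load_rows(active_t1)
    second_pass = load_rows(staging_t2) - load_rows(active_t2)
    return sorted(first_pass & second_pass)


# Made-up data; the header and values are hypothetical.
HEADER = "DN,Operation,Attribute,Value\n"
ACTIVE_T1 = HEADER + "CN=a,update,title,Engineer\n"
STAGING_T1 = HEADER + (
    "CN=a,update,title,Engineer\n"
    "CN=b,update,department,Sales\n"
    "CN=c,update,title,Manager\n"
)
ACTIVE_T2 = HEADER
STAGING_T2 = HEADER + "CN=b,update,department,Sales\n"

if __name__ == "__main__":
    for row in persistent_differences(ACTIVE_T1, STAGING_T1, ACTIVE_T2, STAGING_T2):
        print(row)
```

In the sample, the change for `CN=a` is in flight on the active server and the change for `CN=c` clears by the second pass, so only the change for `CN=b` remains for review.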

From this final report, if there are unexpected exports sitting in the export queue of the staging server, we know they are due to one of the two things already discussed. Changes to the rules can result in a significant amount of new data being staged for export to Azure AD, especially when the updated rule is flowing new default data that wasn’t being exported in previous versions of AADConnect. Changes to the input data can result in different sets of objects being prepared for export.

While the report will tell us what is different, it doesn’t directly tell us why. Fully analyzing the system to understand why the data is different is beyond the scope of this blog post. But hopefully we’ve produced enough useful artifacts to help you discover the source.

In summary for this section, a staging server is used to provide HA capabilities to AADConnect but building a staging server and getting it properly configured can be difficult. I’ve highlighted the main data elements we need to be managing to ensure the staging server has good data on which to operate. And I’ve laid out a methodology for comparing a staging server to an active server, so we can be aware of where differences occur. Finally, we have a way to validate the changes the staging server wants to make to the connected systems before cutting over and before any changes are made.

Upgrades

There are two ways to manage AADConnect upgrades. The first is to simply perform an in-place upgrade on the existing active server by running the new installer. It will make whatever changes it wants to make to the rules and will export the results to Azure AD. You can even automate the upgrade process by enabling the Automatic Upgrade feature. My recommendation for automatic upgrades is to use this method only if you’ve done no customizations to the rules.

The second way is to make use of the staging server for a swing migration. Operationally, it will be identical to the process we already went through to build out the staging server in the first place. Run the upgrade first on the staging server. Perform the comparisons to ensure no unexpected changes were introduced in the upgrade process. If you’re comfortable with the data sitting in the export queue, proceed with putting the current active server into staging mode, then put the new staging server into active mode and it will start exporting the changes it was holding in its export queue. If all the changes processed without causing any errors, then you can upgrade the first server to bring it up to the same level as the newly active server. Now you’ve fully swapped between the active and staging servers.

I hope you’ve found this discussion useful and have a better understanding of how to manage your AADConnect infrastructure to provide the best possible foundation for your hybrid Azure experience.

Appendix A: Connecting OUs

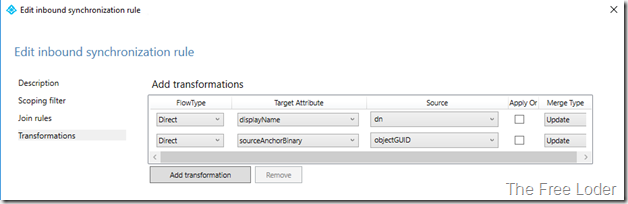

First, we need an object type in the metaverse to represent OUs. In the Sync Manager, go to the Metaverse Designer, create a new object type and call it organizationalUnit. For the attributes, include displayName and sourceAnchorBinary.

In the Rules Editor, create a new Inbound rule. The connector is your AD forest, and the precedence must be a unique, non-zero number (I picked 90). The object type is organizationalUnit. The Link Type is Provision. For the Join rules, include objectGUID = sourceAnchorBinary. For the transformations, include direct objectGUID to sourceAnchorBinary and direct dn to displayName.

Updated 2020.04.03 moved links to the GitHub Repository